Content Transformations: Adapting Information to Your Needs

P.S. Lab Notes are written for and organized by 👤 persona types. We wanted to sort our content by the way people think rather than by topic, because most topics benefit people from all kinds of backgrounds in product building. This lets readers home in on what suits them, e.g. perspectives, lessons learned, or tangible actions to take.

I consume a lot of content, especially while preparing our Pipette issues, and the process of learning itself has been on my mind recently. Specifically, I've been thinking about how we prefer to learn, the types of content we consume, and content transformations: ways to convert that content into different formats.

Learning Styles

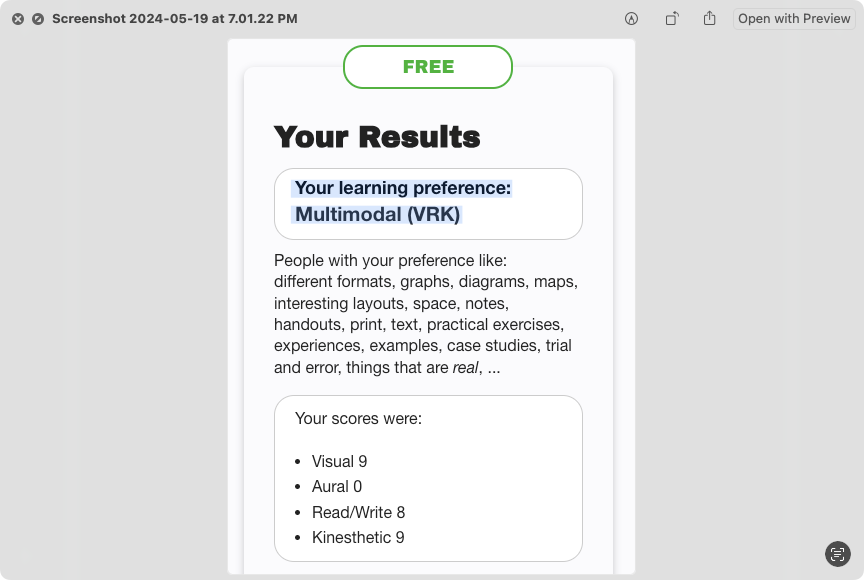

Before writing this article, I would have described myself as a visual learner. I'm constantly drawn to charts and diagrams when learning a new concept, and if they're not available, I tend to make them myself. But I wanted to check for sure (for science?!), so I took an online quiz. It scores learning preference (not aptitude!) in 4 categories:

- Visual: preference for visual elements like maps, plans, charts, and diagrams.

- Aural: preference for sound-based learning, such as listening and engaging in conversation with others.

- Text-based: preference for written lists, text, and notes, whether physical or digital.

- Kinesthetic (Tactile in some other quizzes): preference for trying things physically and being hands-on, including working through practical examples of a concept.

Have a minute to spare? Take the quiz from VARK Learn and check out their page on how to understand the results. There are some great bits about active learning in there, along with other points worth exploring. Once you're done, let us know what you got via our feedback form!

Learning Style Results and Our Relationship to Content Types

I was pretty surprised to see that I scored as a "multimodal" learner (2+ learning styles), with a high preference for everything but auditory (aural). As it turns out, that's common: according to VARK's research, over 65% of learners prefer 2+ modes.

On the visual side, I tend to recall concepts especially well if there is a graph, diagram, chart, or map associated with them. Something about their spatial aspect really burns the idea into my brain, and I can remember them years later.

The human relationship to spatial learning is pretty interesting too. In Moonwalking with Einstein, Joshua Foer explores a technique called "memory palaces," in which people construct elaborate mental spaces to store incredible amounts of information. He also points to a study of London taxi drivers suggesting that geospatial knowledge can physically change the brain's structure: the hippocampus (a region involved in spatial navigation) was 7% larger in cabbies than normal. The difference is attributed to The Knowledge, a test they must pass that covers 25,000 streets and their points of interest.

You can tell that I get pretty amped about visual content. On the flip side, listening is probably how I absorb the least (hence my low score there). Still, despite my learning preference, listening has specific advantages over other learning modes, like being hands-free so I can do chores or go for a walk while I learn.

Pros and Cons of Different Formats

What about the rest of the learning styles (and associated content types)? Let’s look at their properties and some of the benefits and constraints they have.

- Digital text
  - Pros: You can look up any word or phrase in a document instantly, and you can copy/paste pieces of text anywhere.
  - Cons: You need to have eyes on the text, and it's not hands-free.

- Physical books and handwritten notes
  - Pros: They get you away from devices. Some authors are not available digitally, which gives you access to a different pool of information. You can highlight and take notes in the margins (of your own books 🙂). On the writing front, there's evidence that taking notes by hand reinforces the material in a way that typing them does not.
  - Cons: You need to have the physical object on you, and it's not hands-free.

- Video
  - Pros: It's multimodal by default: information is presented visually, often with an audio component, and sometimes with text too. Checks a lot of boxes! You can speed the video up or slow it down, and it's easy to link to a specific portion.
  - Cons: You need to have eyeballs on the content.

- Audio
  - Pros: Hands-free! You can listen or talk while driving, walking the dog, etc.
  - Cons: There's no way to search for something specific, it's more challenging to reference and recall facts afterward, and it's harder to share specific portions with others.

Reasons for Transforming Content

As we covered, each content type has unique properties, so a given format may suit you less well in the moment or in general. For example:

- Environmental Issues: Something isn't meshing with your current or future environment. E.g., you need to walk your dog but also want to learn about memory palaces.

- Reinforcing Learning: You want to gain a different perspective, solidify your understanding by learning in a different mode, or simply learn the way you prefer.

- Accessibility: The format itself creates a barrier. E.g., a podcast with no transcript is out of reach for someone who is deaf or hard of hearing.

Can you think of other situations where you’ve wanted material in a different format? Let us know using the feedback form!

Knowing our learning style preferences means we can optimize for them.

For example, you may find yourself working with material in a format that doesn't match your preferred learning style. In that case, you can search the web for it in a different format. Regardless of your preferences, it can also be interesting to take something you already have and look at it differently. Enter content transformations.

Content Transformations

You may already use some common content transformations, such as listening to audiobooks in place of books, or reading transcripts in place of podcasts and videos (many YouTube videos have searchable transcripts).

However, there are other, less common techniques that I've found useful, like leveraging accessibility tools, AI, and other apps. Let's explore some.

Less obvious transformations:

- Webpage → audio: have it read aloud via an accessibility assistant (your phone reads it to you)

- Image → text (Google Lens, Mac Preview, etc.)

- Conversation → text by dictating to your computer (instructions for Mac) or phone.

- Tip for kinesthetic learners: set up a playground, a scenario, or something practical to represent the situation you're studying

Using AI:

- Text document (PDF too) → conversation: Upload your document to your favorite chatbot. At the time of writing, ChatGPT and Claude.ai both support uploading documents as added context.

Let’s expand on some of these a bit more.

How to: Image to Text

Instructions vary slightly depending on your platform, but the gist is that you can take any image containing text and use an app or website to extract that text. Under the hood, these tools use OCR (optical character recognition).

If you want to use a website, OnlineOCR.net is spartan but gets the job done. I’ve used it plenty of times over the years. Now there are more native integrations that you can use without having to open a browser.

For example, on Macs, you can select text from an image right in its Finder preview (Quick Look); the Preview app supports this too.

- Find the image in Finder

- With it selected, press Space to bring up its preview

- Click and drag over any text in the image to select it

- Or click the icon in the bottom right to highlight all detected text

- Copy your selection to the clipboard (⌘C)

Prefer to use your phone? Here are the instructions for native phone apps: Google Lens (Android) and Live Text (iOS).

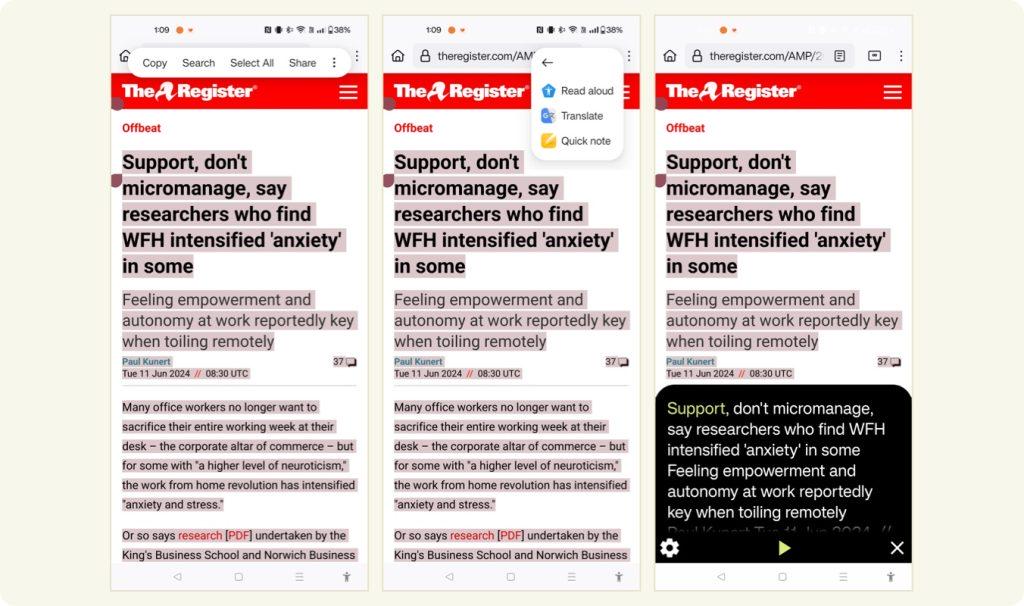

How to: Read a webpage with accessibility tools

I’ll show how I do this on Android and provide some instructions for other platforms too.

- Long press any text

- Tap Select All (if the site name or other page clutter is highlighted, drag the top selection handle to exclude it)

- Tap the three-dot menu, then Read Aloud

- Your phone will start reading the text. You can press pause or adjust speed with the settings menu.

For iOS, see these instructions.